It is an exciting time to be a designer. Emerging technologies, from machine learning and artificial intelligence to self-driving vehicles, make us question what we know about interfaces. We are still at the very beginning of the AI journey and do not yet fully understand the impact of our design decisions. As Josh Lovejoy, user experience designer at Google, recently put it: “No-one really knows how to do that UX for AI thing yet. (…) We know just enough to know that the space is in desperate need of multi-disciplinary collaboration.”

At Stanley Robotics, we fully embrace problem-solving through design thinking and empathic design. Our engineers, service operators and product managers are the experts on the systems we design for. It is crucial to understand how they reason about and perceive the system, and we have found it especially important to involve them in the design process of each of our projects. As a result, we have been busy testing popular methodologies and adapting them to the particularities of designing for self-driving vehicles and artificial intelligence.

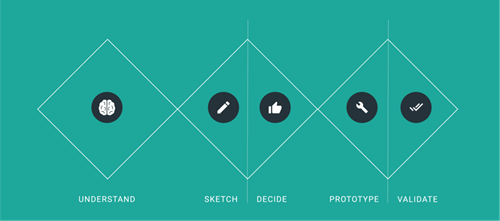

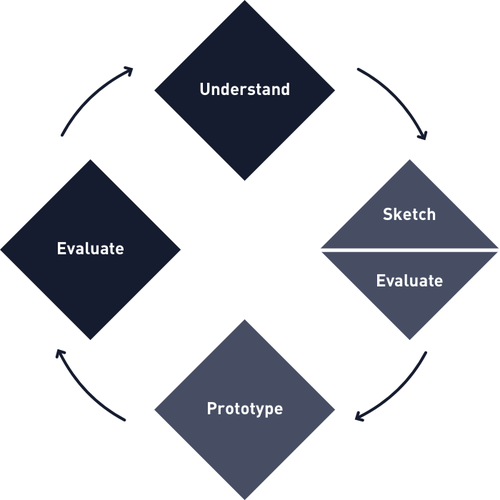

Whether it is the User-Centred Design Cycle or the Double Diamond, these models all follow, more or less, the same pattern. First, we research with the aim of understanding the users, their needs and their viewpoints. Then, we define a problem space. Finally, we sketch ideas, prototype and test before starting anew and iterating.

While these models are user-centred, which is a good thing, they are also designer-centred and often fail to offer methods that work well in cross-functional teams. Although Google’s well-known Design Sprints are business-centric, we have found them a good place to start building our own design process model.

The difference in our own design process is that some phases are specifically intended for designers, engineers, service operators, developers and product managers. By that we mean that we do not merely interact with them; they actively take part in the design process. In addition, as an agile company, we consider it critical to use a model designed for iterative processes.

During the first phase, just like in any other model, we aim to understand the problem space. We hold interviews and observe people at work. However, we also hold what we call a kick-off workshop. Participants come from all the teams involved, and through a number of exercises we make sure that the problem space, as defined by us designers, aligns with everyone’s needs.

One exercise that has delivered exceptional results is what we call “Draw It Like I See It”. Before the workshop, we come up with two or three topics we would like to clarify and dig deeper into. As in Crazy Eights, the goal is to draw; however, we ask people to draw how they imagine a certain functionality works. This might be an interface or a component, but it does not have to be.

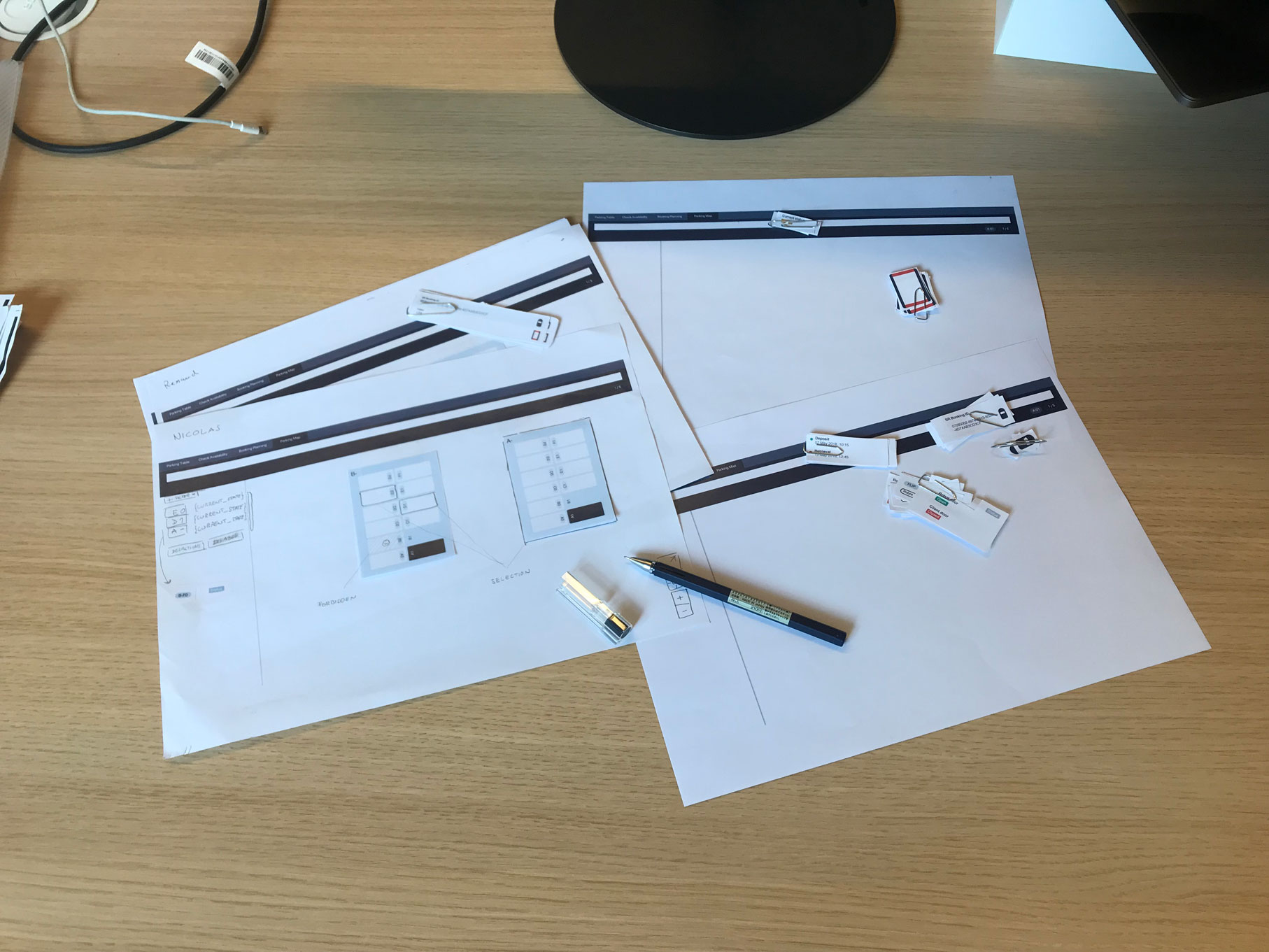

The goal is simply to foster understanding throughout the team. Similarly, for established interfaces, we have started bringing interface kits to all our meetings, containing every interface component in paper form. This way, components can easily be moved around, drawn on, cut up and reassembled, which helps communicate ideas quickly.

Now one might ask: why do we need to work with engineers? What is different about autonomous vehicles and artificial intelligence compared to traditional systems? Someone from the technical world explaining programming to someone with little knowledge of it might describe it as ‘using a language to tell a computer or a machine what to do’. Abstract as it is, this is understandable.

The difference with artificial intelligence is that it is not only the developer who tells the machine what to do: the machine starts to make decisions on its own. This is a rather complex concept that raises a lot of questions: How does that work? To what extent is the machine autonomous? What if things go wrong?

While users work with a system, they build up what designers and psychologists call a ‘mental model’: their own explanation of how the system works. It helps them interact with the system and decide what to do when things go right, and especially when things go wrong. It is important to understand what a user’s mental model looks like, not just when designing for artificial intelligence or self-driving vehicles, but for any system.

Only then can we start developing interfaces that are tailored to the needs of users. Engineers and service operators, who fully understand how everything is interconnected, can then help the designer translate that knowledge into a ‘conceptual model’: the picture of the system that the designer would like to paint, and which helps users build a mental model that works well.

Once we know how engineers see the system behind the interface we are developing, we turn our attention to our users. The second and third phases therefore focus on what we as designers do best: ideating, evaluating and prototyping iteratively. We use the usual techniques to do so: sketching, wireframing, and paper and medium-fidelity prototyping. With so many different user groups, it can become difficult to see how they differ from one another. To align their goals, we have become big supporters of job stories.

Readers familiar with user stories will know them as a tool for describing the goals of a user in an interface. Job stories differ in that they focus on the situation, motivation and expected outcome rather than on extraneous details, and are commonly written in the form “When [situation], I want to [motivation], so I can [expected outcome].”

Finally, in phase four, we test our interface with both engineers and service operators. We typically give them a task, identified during the first phase, to complete using our interface. Because the two groups use our interfaces in different situations, it is always interesting to see what happens when they are presented with the same task. Does the conceptual model correspond to both mental models? To what extent do those mental models differ? Where does the difference originate? The answers let us iterate again and again until we find a solution that ticks all the right boxes.

When designing an interface for a system built on artificial intelligence, it is tempting to start with one interface and work one’s way through the others. However, if there is one piece of advice we can give you, it is this: do not go down that road. Instead, take your time to gain a holistic picture of what each interface is for and to understand how the system works as a whole. The idea is to build your own mental model the same way an engineer or other related party would, leading to deeper understanding and better products and results, right from the beginning.

About Stanley Robotics

Stanley Robotics is a venture-backed company founded in January 2015 and headquartered in Paris, France. It is developing an automated valet parking service with robots that can move any vehicle and increase capacity in existing car parks by up to 50%, while revolutionizing the user experience.

The three founders, Clément Boussard (CEO), Aurélien Cord (CTO) and Stéphane Evanno (COO), all have previous experience in driverless technology (at top research institutes and with a world-class industrial corporation).